Multidimensional AI

Secure, Responsible, & Trusted AI

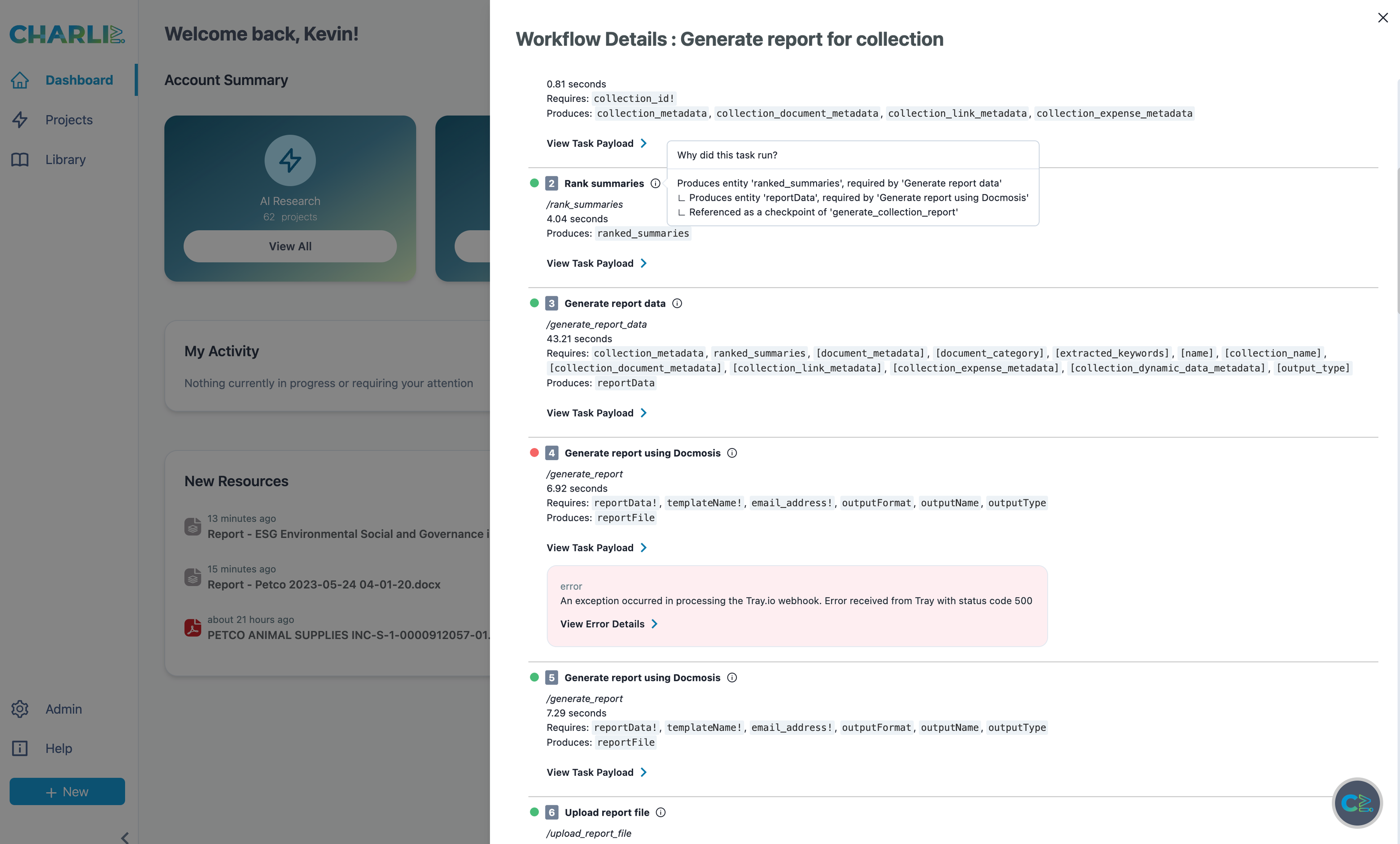

End-to-end visibility – like the enterprise expects

Multidimensional AI was designed with end-to-end traceability and visibility built right in. It is the first True AI for the enterprise: a platform built as an AI ensemble that employs multiple models to create robust, accurate, and traceable output.

Multidimensional AI builds transparency into every decision flow. It records why each decision point was invoked, the inputs it received, and the results it produced, and it gives visibility into errors and exceptions.
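As an illustration only, the kind of record such a trace might hold can be sketched in Python. The names `DecisionTrace` and `run_decision_point` are hypothetical and are not part of the Charli AI platform; the sketch simply captures the four items listed above (reason, inputs, result, error) for each decision point.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional


@dataclass
class DecisionTrace:
    """One audit record per decision point (hypothetical structure)."""
    decision_point: str
    reason: str                   # why the decision point was invoked
    inputs: Dict[str, Any]        # the inputs it received
    result: Any = None            # the result it produced
    error: Optional[str] = None   # exception details, if any


def run_decision_point(
    name: str,
    reason: str,
    fn: Callable[..., Any],
    inputs: Dict[str, Any],
    log: List[DecisionTrace],
) -> DecisionTrace:
    """Run one decision point and append a trace, even when it fails."""
    trace = DecisionTrace(name, reason, dict(inputs))
    try:
        trace.result = fn(**inputs)
    except Exception as exc:
        # Keep the failure visible in the trace instead of losing it.
        trace.error = f"{type(exc).__name__}: {exc}"
    log.append(trace)
    return trace
```

Because every call appends a record whether it succeeds or fails, the log can be replayed later to see which decision points ran, with what inputs, and where an exception occurred.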

Multidimensional AI addresses quality assurance and accuracy

Continuous training and testing

We continuously train and test all models in production, and we incorporate an AI Test Framework that alerts on discrepancies and issues in newly trained model instances. This constant training ensures accuracy and visibility.
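One simple way to picture this kind of check is a comparison between the production model and a newly trained instance on the same labeled test set. The sketch below is an assumption for illustration, not the AI Test Framework itself; `check_new_model` and the `max_drop` threshold are hypothetical names.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the labels."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def check_new_model(prod_preds, new_preds, labels, max_drop=0.01):
    """Compare a newly trained model against production on one test set.

    Returns both accuracies, the indices of cases that production got
    right and the new model gets wrong, and a list of alert messages.
    """
    prod_acc = accuracy(prod_preds, labels)
    new_acc = accuracy(new_preds, labels)
    regressions = [
        i
        for i, (p, n, y) in enumerate(zip(prod_preds, new_preds, labels))
        if p == y and n != y
    ]
    alerts = []
    if prod_acc - new_acc > max_drop:
        alerts.append(f"accuracy dropped from {prod_acc:.3f} to {new_acc:.3f}")
    if regressions:
        alerts.append(f"{len(regressions)} previously correct case(s) now fail")
    return {
        "prod_accuracy": prod_acc,
        "new_accuracy": new_acc,
        "regressions": regressions,
        "alerts": alerts,
    }
```

Tracking per-case regressions alongside overall accuracy matters because two models can score the same in aggregate while disagreeing on individual cases.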

Feedback loops to scale AI and ensure veracity

Multidimensional AI incorporates two types of feedback loops: human-based interfaces for reviewing model decisions and output, and system-based feedback loops that are specifically trained.

Automated Acceptance Testing

Automated Acceptance Testing allows internal QA and external customers to perform accuracy and quality checks on decision flows and the results that come from them. This independent verification is another feedback loop for training and testing.

Secure by design

Multidimensional AI was built to operate in highly secure environments. The Active Decision Flow Controls provide deep-level secure checkpoints for regulatory and compliance requirements. These controls allow us to work with confidential information.

Charli AI's Multidimensional AI leverages its network of AI models to deliver fact-checked outcomes

Accuracy and fact-check analysis are built right into our platform. Charli AI incorporates a suite of “challenger” models that are trained to verify the accuracy of any information in AI-generated outcomes. Fact-check analysis is needed to mitigate the risk of hallucinations and to address limitations in LLMs and other AI models.

Fact-check analysis performs cross-checks using extraction methods as well as relevancy checks, so that hallucinations can be identified, flagged, and redlined. It also reports inaccuracies in factual statements and numbers. Why does this matter? Factual and relevant outputs are crucial to highly regulated industries and to the future of AI regulation.
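To make the idea of a numeric cross-check concrete, here is a deliberately simple Python sketch. It is a stand-in for illustration, not the challenger models described above: it extracts numbers from a source text with a regular expression and flags any number in a generated statement that the source does not contain. The function names are hypothetical.

```python
import re

# Matches integers and decimals, with optional thousands separators.
NUMBER = re.compile(r"\d+(?:,\d{3})*(?:\.\d+)?")


def extract_numbers(text):
    """Return the set of numeric values found in the text."""
    return {float(m.replace(",", "")) for m in NUMBER.findall(text)}


def flag_unsupported_numbers(generated, source):
    """List numbers in the generated text that do not appear in the source."""
    supported = extract_numbers(source)
    flagged = []
    for match in NUMBER.finditer(generated):
        value = float(match.group().replace(",", ""))
        if value not in supported:
            flagged.append(match.group())
    return flagged
```

For example, given a source stating "up 4.5 percent" and a generated sentence claiming "a rise of 5.4 percent", the function returns the unsupported figure so it can be flagged for review. A production fact-checker would also need to handle units, rounding, and paraphrased statements, which this sketch ignores.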